
I am a visiting Robotics scholar at Princeton's Embodied Computation Lab and ARG. I received my M.S. in Mechanical Engineering at UC Berkeley, working with Prof. Mark Mueller at the High Performance Robotics Lab (HiPeRLab). Previously, I studied Aeronautics and Astronautics at Purdue University, where I had the privilege of working with Prof. Shirley Dyke and Prof. David Cappelleri at the NASA-funded RETH Institute. My research interests include robotics and control, with applications in hardware design, distributed and collaborative multi-agent systems, human-robot interaction, and contact-rich manipulation with learning-based approaches.

Publisher

Abstract

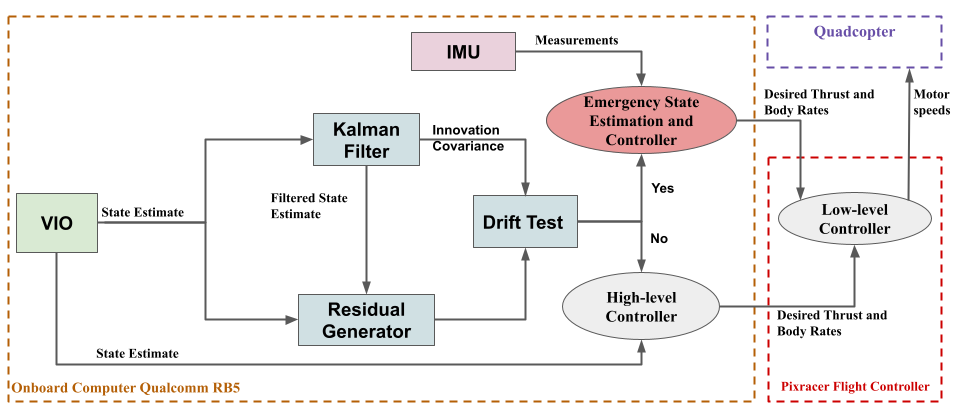

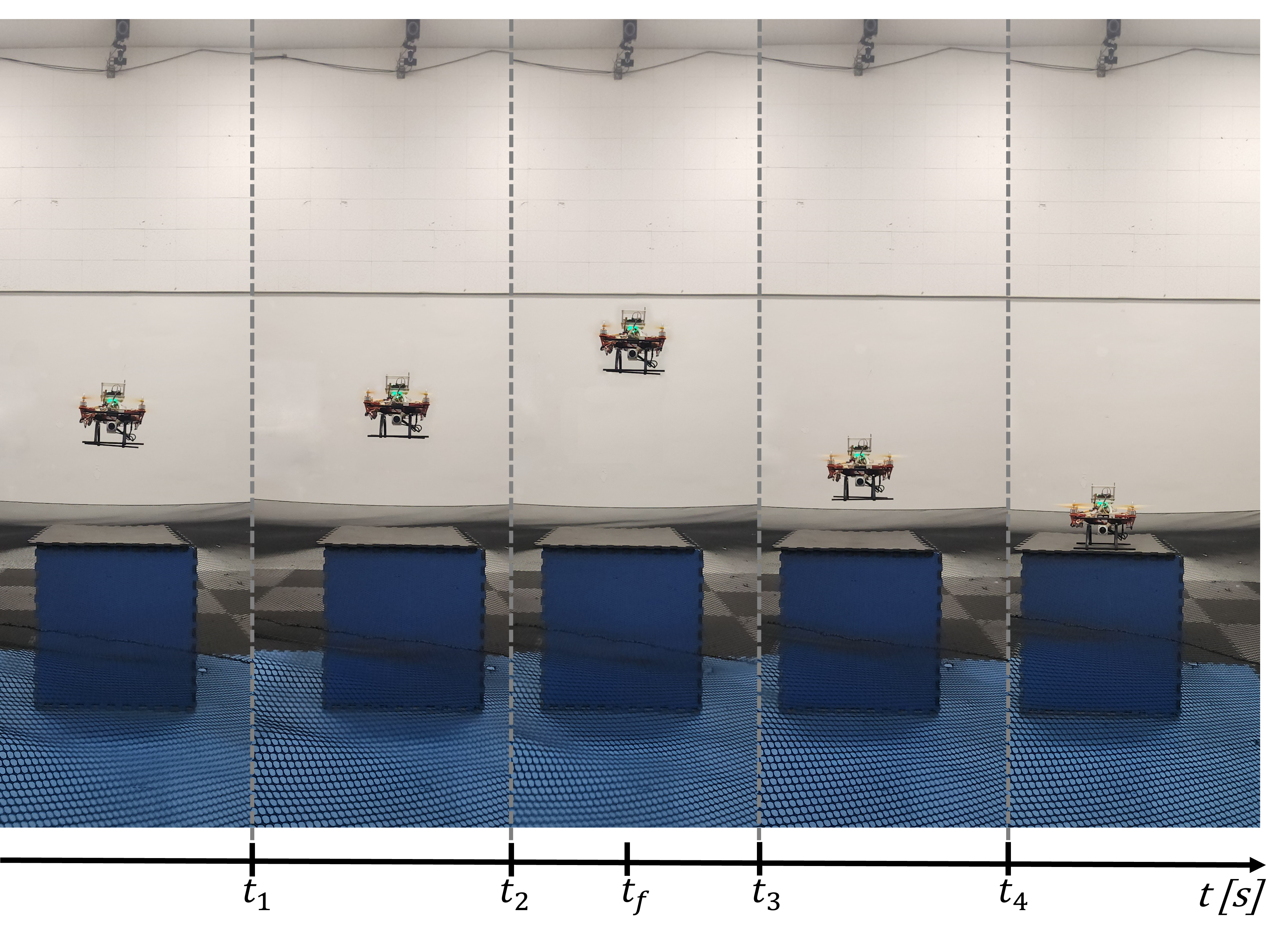

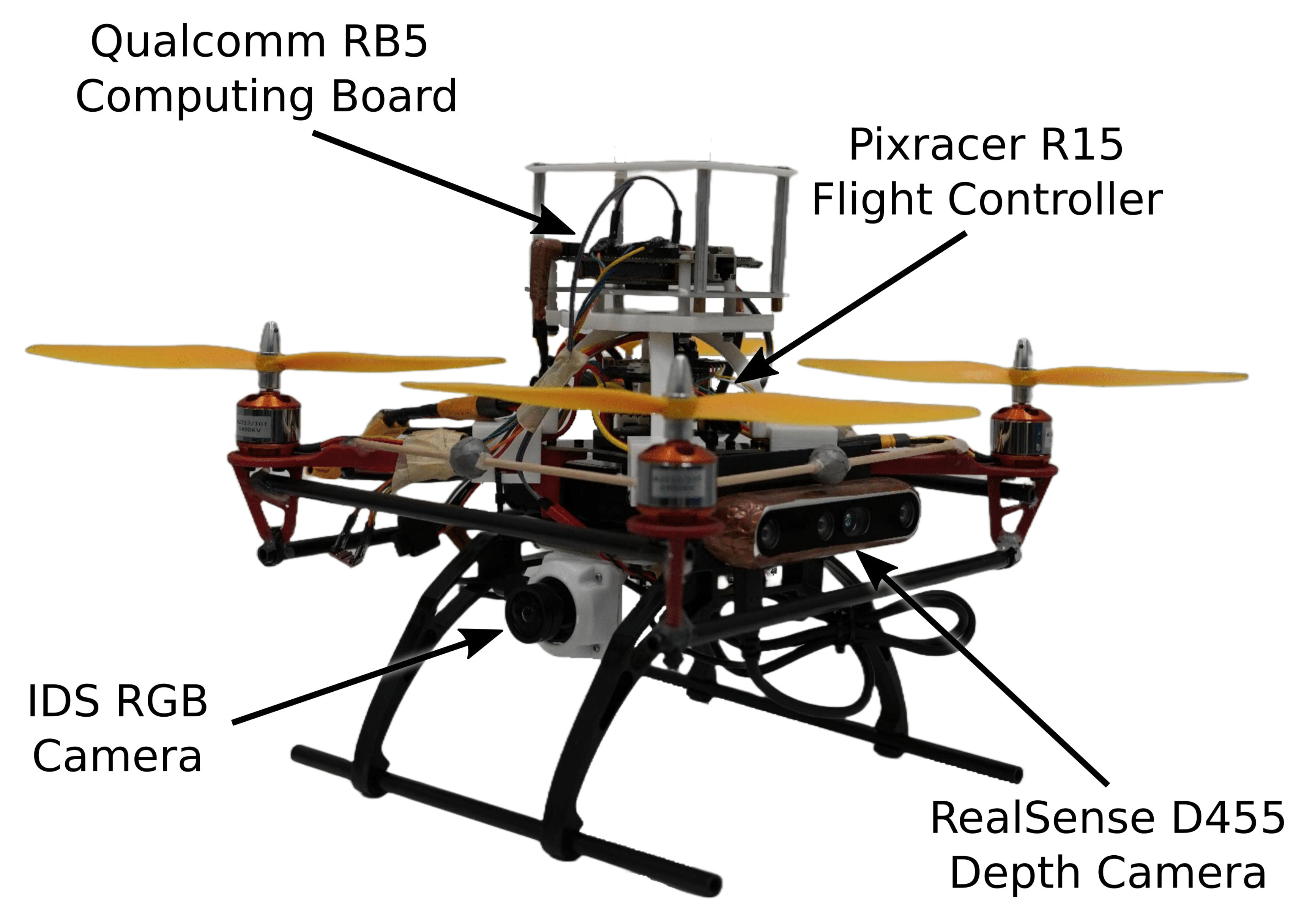

We present a conceptual framework for an autonomous safety mechanism designed to enhance the reliability of Unmanned Aerial Vehicles (UAVs) that use Visual-Inertial Odometry (VIO) for state estimation. As UAVs increasingly interact with the public, such safety mechanisms are crucial to reducing the likelihood and severity of accidents. VIO drift, which occurs when accumulated estimation errors cause discrepancies between the UAV’s perceived and actual position, poses a significant risk to safe operation. To address this challenge, we propose a Kalman filter-based approach for detecting VIO drift events. Upon detection, the envisioned safety mechanism is designed to adjust state estimation by integrating onboard gyroscope measurements and thrust commands for short durations, aiming to enhance stability and prevent potential crashes before initiating a controlled landing. While this framework provides the foundation for a real-time safety mechanism, the implementation and experiments focus on validating the drift detection component in an offline setting using real UAV flight data. The results demonstrate the effectiveness of the detection method in identifying VIO drift scenarios, highlighting its potential for future real-time applications.
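The abstract does not spell out the detection test itself. One common way to build a Kalman filter-based inconsistency detector is innovation gating: predict the state with an input that is independent of VIO, compare each VIO position against that prediction through the normalized innovation squared (NIS), and flag drift after several consecutive chi-square gate failures. The following is a minimal 1-D sketch under those assumptions only; the acceleration input `u` (a stand-in for thrust-derived acceleration), the noise values `q` and `r`, and the `window` length are hypothetical, and this is not necessarily the authors' detector.

```python
import numpy as np

# 99th percentile of the chi-square distribution with 1 degree of freedom.
CHI2_1DOF_99 = 6.635


def detect_vio_drift(z, u, dt=0.01, q=0.5, r=1e-4, window=5):
    """Flag the step where VIO positions z stop agreeing with an input-driven prediction.

    z: VIO position measurements (m). u: acceleration input (m/s^2), assumed here to
    come from thrust commands. Returns the first flagged index, or None.
    All parameter values are illustrative assumptions.
    """
    F = np.array([[1.0, dt], [0.0, 1.0]])  # constant-velocity state transition
    B = np.array([0.5 * dt**2, dt])        # how acceleration enters [position, velocity]
    H = np.array([[1.0, 0.0]])             # VIO measures position only
    Q = q * np.outer(B, B)                 # process noise from acceleration uncertainty
    R = np.array([[r]])                    # VIO position noise variance
    x = np.array([z[0], 0.0])
    P = np.eye(2) * 1e-3
    streak = 0
    for k in range(1, len(z)):
        # Predict with the VIO-independent acceleration input.
        x = F @ x + B * u[k - 1]
        P = F @ P @ F.T + Q
        # Innovation and normalized innovation squared (NIS).
        y = np.array([z[k]]) - H @ x
        S = H @ P @ H.T + R
        nis = float(y @ np.linalg.solve(S, y))
        if nis > CHI2_1DOF_99:
            # Gate failure: reject this VIO sample and count consecutive rejections.
            streak += 1
            if streak >= window:
                return k
            continue
        streak = 0
        # Standard Kalman update with the accepted measurement.
        K = P @ H.T @ np.linalg.inv(S)
        x = x + K @ y
        P = (np.eye(2) - K @ H) @ P
    return None
```

For example, with a hovering vehicle (`u` all zeros) and a noise-free VIO trace that jumps by 0.5 m at sample 100, the detector returns 104, the fifth consecutive rejected sample. Rejecting gated measurements instead of fusing them keeps the filter from absorbing the drift into its own estimate.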

arXiv | Video | Poster

Abstract

We present an autonomous aerial system for safe and efficient through-the-canopy fruit counting. Aerial robot applications in large-scale orchards face significant challenges due to the complexity of fine-tuning flight paths based on orchard layouts, canopy density, and plant variability. Through-the-canopy navigation is crucial for minimizing occlusion by leaves and branches but is more challenging due to the complex and dense environment compared to traditional over-the-canopy flights. Our system addresses these challenges by integrating: i) a high-fidelity simulation framework for optimizing flight trajectories, ii) a low-cost autonomy stack for canopy-level navigation and data collection, and iii) a robust workflow for fruit detection and counting using RGB images. We validate our approach through fruit counting with canopy-level aerial images and by demonstrating the autonomous navigation capabilities of our experimental vehicle.

Publisher

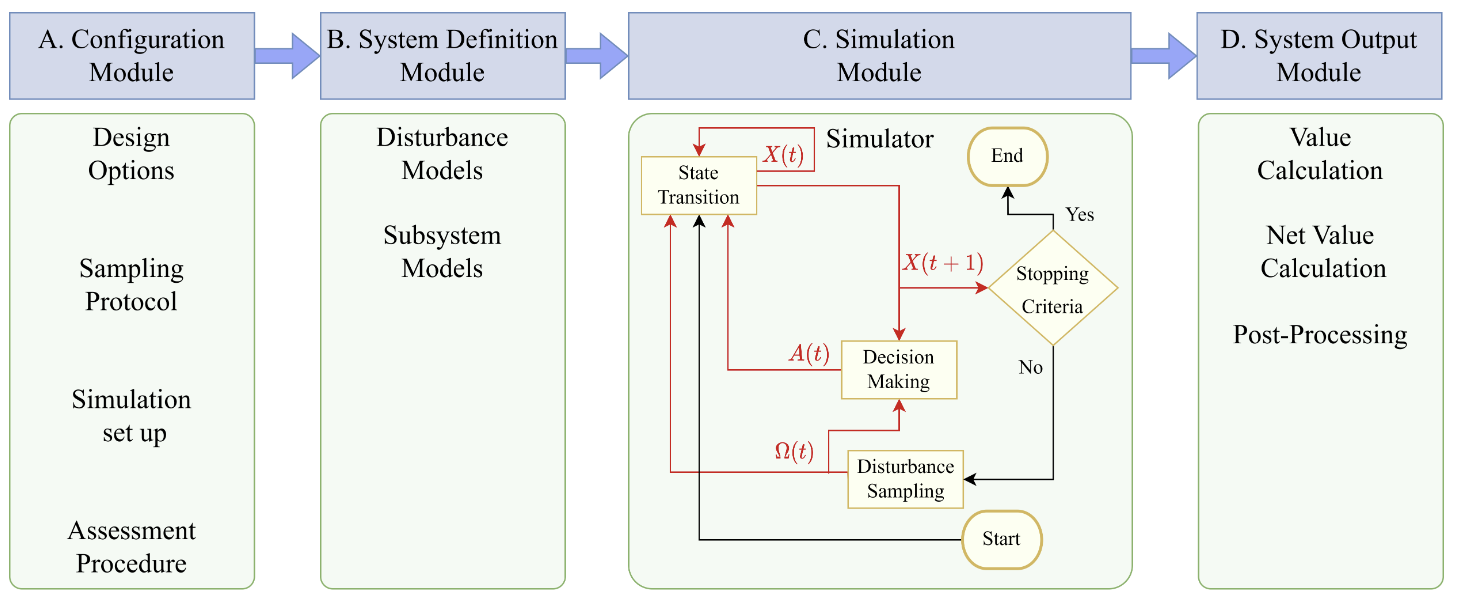

Abstract

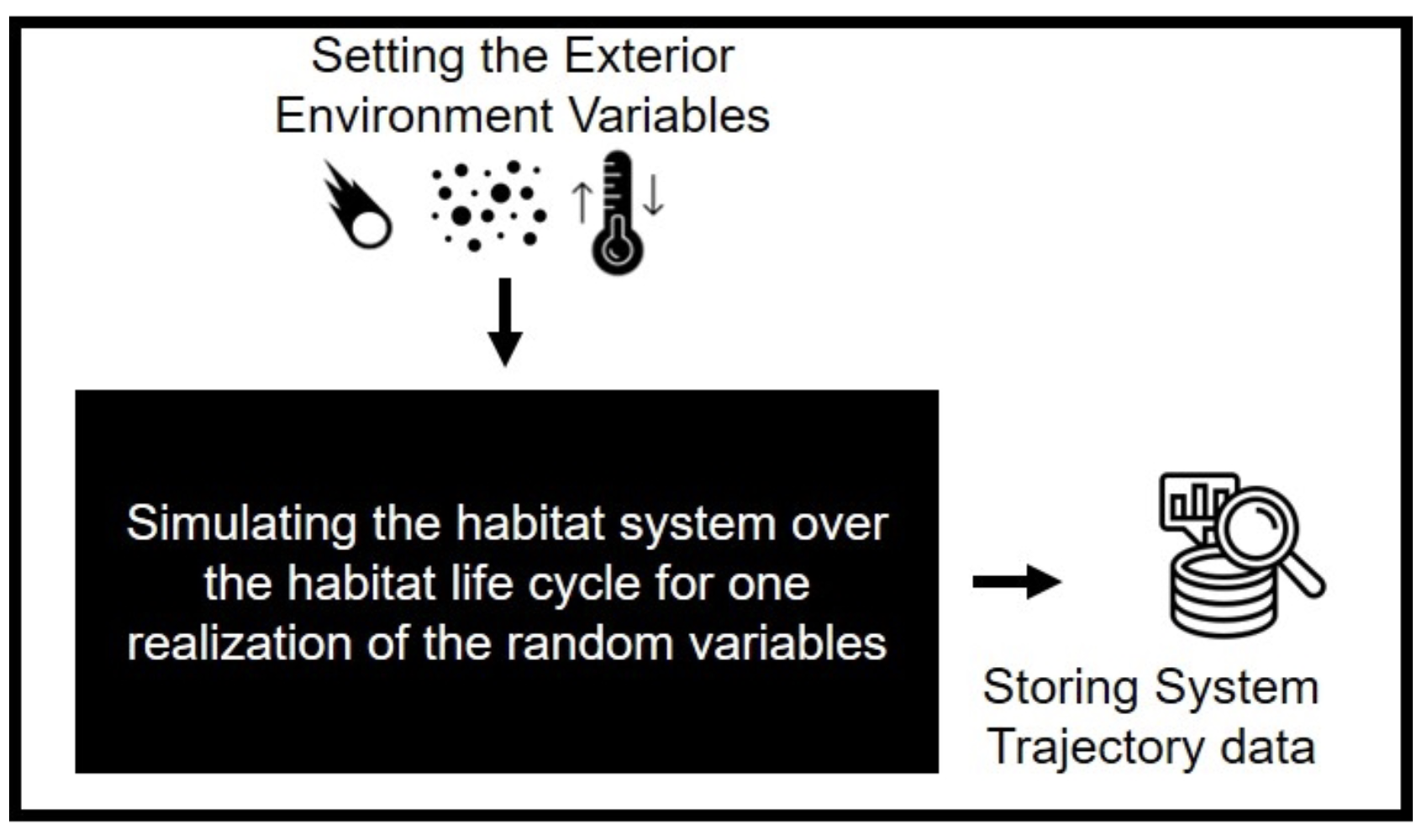

Resilience is a vital consideration for designing and operating a deep space habitat system. The numerous hazards that may affect a deep space habitat and its crew during its lifecycle need to be considered early in the design. Trade-off studies are the typical method used to assess the cost and value of different design choices. Here we develop a modular dynamic computational framework intended for rapid simulation and evaluation of the resilience of different system configurations. The framework uses a system-level phenomenological Markov model of the habitat systems, enabling us to assess multiple habitat configurations and evaluate their performance in the presence of several hazards and user-defined control policies. System fault detection and repairs are modeled. External disturbances, including meteorite impacts, temperature fluctuations, and dust, are modeled based on the lunar environment, envisioning a deep space habitat design. To close the loop, we use a reflexive health management subsystem that prioritizes recovery actions based on the availability of the robotic agent. In addition to performance, a resilience metric is included to quantify the system’s resilience over the design lifecycle. We illustrate the use of the framework for supporting early-stage design decisions of a habitat system. Our case study focuses on designing the power generation system considering cost and energy efficiency.
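To make the Markov-model idea concrete, here is a toy sketch of how hazards, repairs, and a resilience metric can combine in a trade study. It assumes a single three-state subsystem (nominal, degraded, failed), made-up per-step transition probabilities, and resilience defined as time-averaged expected performance; the paper's own model and metric may differ.

```python
import numpy as np

# Hypothetical per-step transition matrix for one habitat subsystem.
# States: 0 = nominal, 1 = degraded, 2 = failed. Each row sums to 1.
P_BASELINE = np.array([
    [0.97, 0.02, 0.01],  # nominal: hazards may degrade or fail the subsystem
    [0.10, 0.85, 0.05],  # degraded: a repair may restore it, or it may fail
    [0.05, 0.00, 0.95],  # failed: slow repair back to nominal
])
# Fraction of nominal capability delivered in each state (assumed values).
PERFORMANCE = np.array([1.0, 0.5, 0.0])


def performance_curve(P, performance, p0, steps):
    """Propagate the state distribution p_{t+1} = p_t P and record expected performance."""
    p = np.asarray(p0, dtype=float)
    curve = np.empty(steps)
    for t in range(steps):
        curve[t] = p @ performance
        p = p @ P
    return curve


def resilience(curve):
    """A simple resilience proxy: mean expected performance over the horizon."""
    return float(curve.mean())


# Two hypothetical configurations that differ only in repair speed from "failed".
P_FAST_REPAIR = P_BASELINE.copy()
P_FAST_REPAIR[2] = [0.20, 0.00, 0.80]

start = [1.0, 0.0, 0.0]  # begin in the nominal state
base = resilience(performance_curve(P_BASELINE, PERFORMANCE, start, 500))
fast = resilience(performance_curve(P_FAST_REPAIR, PERFORMANCE, start, 500))
print(f"baseline resilience: {base:.3f}, fast-repair resilience: {fast:.3f}")
```

Comparing the two printed values is the kind of early-stage trade-off the framework is meant to support, here reduced to a single knob (repair rate) for illustration.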

Featured in IEEE Spectrum | arXiv | Video

Abstract

We propose a novel human-drone interaction paradigm in which a user directly interacts with a drone to light-paint predefined patterns or letters through hand gestures. The user wears a glove equipped with an IMU sensor to draw letters or patterns in midair. The developed ML algorithm detects the drawn pattern, and the drone light-paints each pattern in midair in real time. The proposed classification model correctly predicts all of the input gestures. The DroneLight system can be applied in drone shows, advertisements, distant communication through text or patterns, and rescue. To our knowledge, it would be the world's first human-centric robotic system that people can use to send messages based on light-painting over distant locations (drone-based instant messaging). Another unique application of the system would be a vision-driven rescue system that reads light-painting by a person in distress and triggers a rescue alarm.

arXiv | Video

Abstract

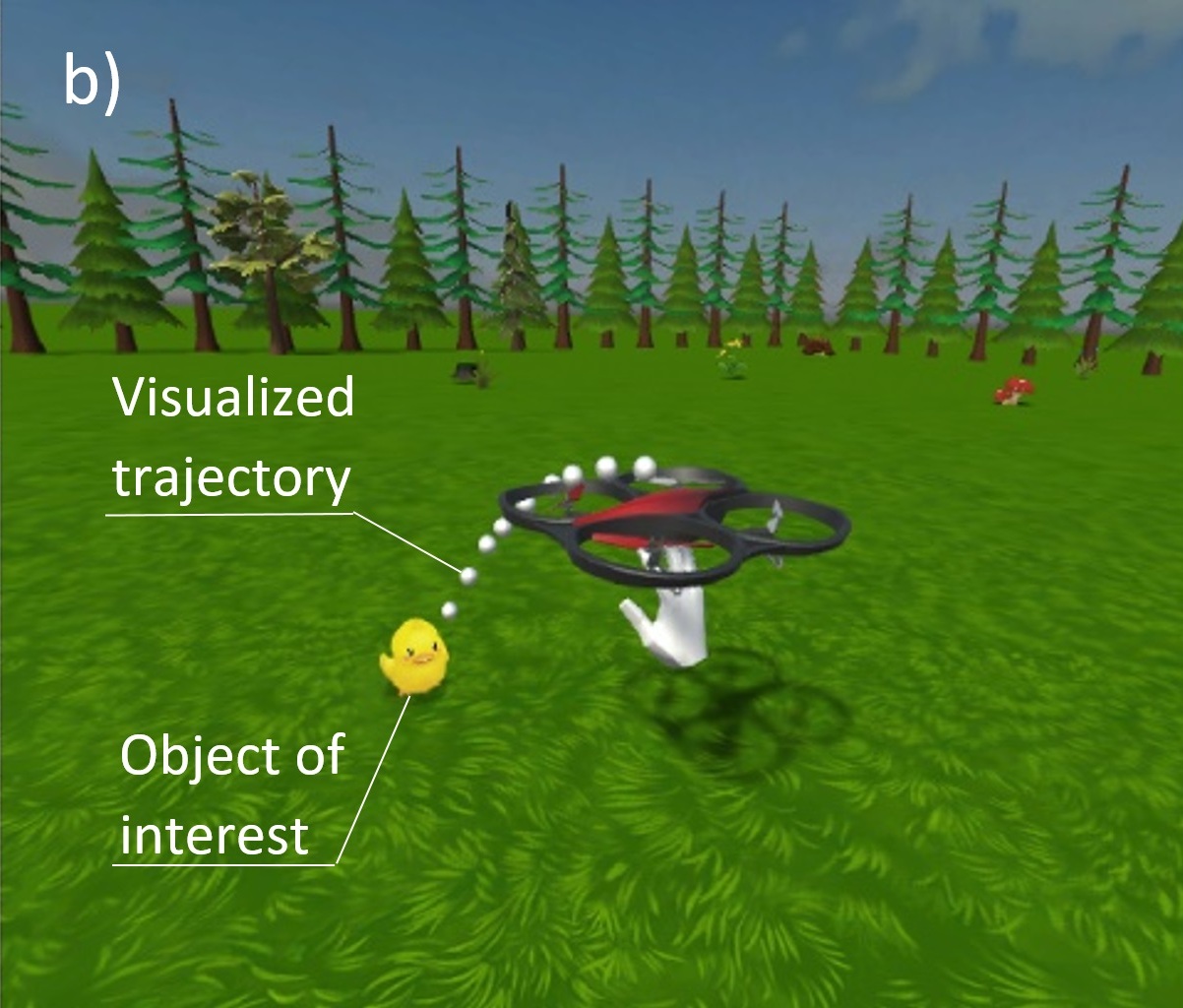

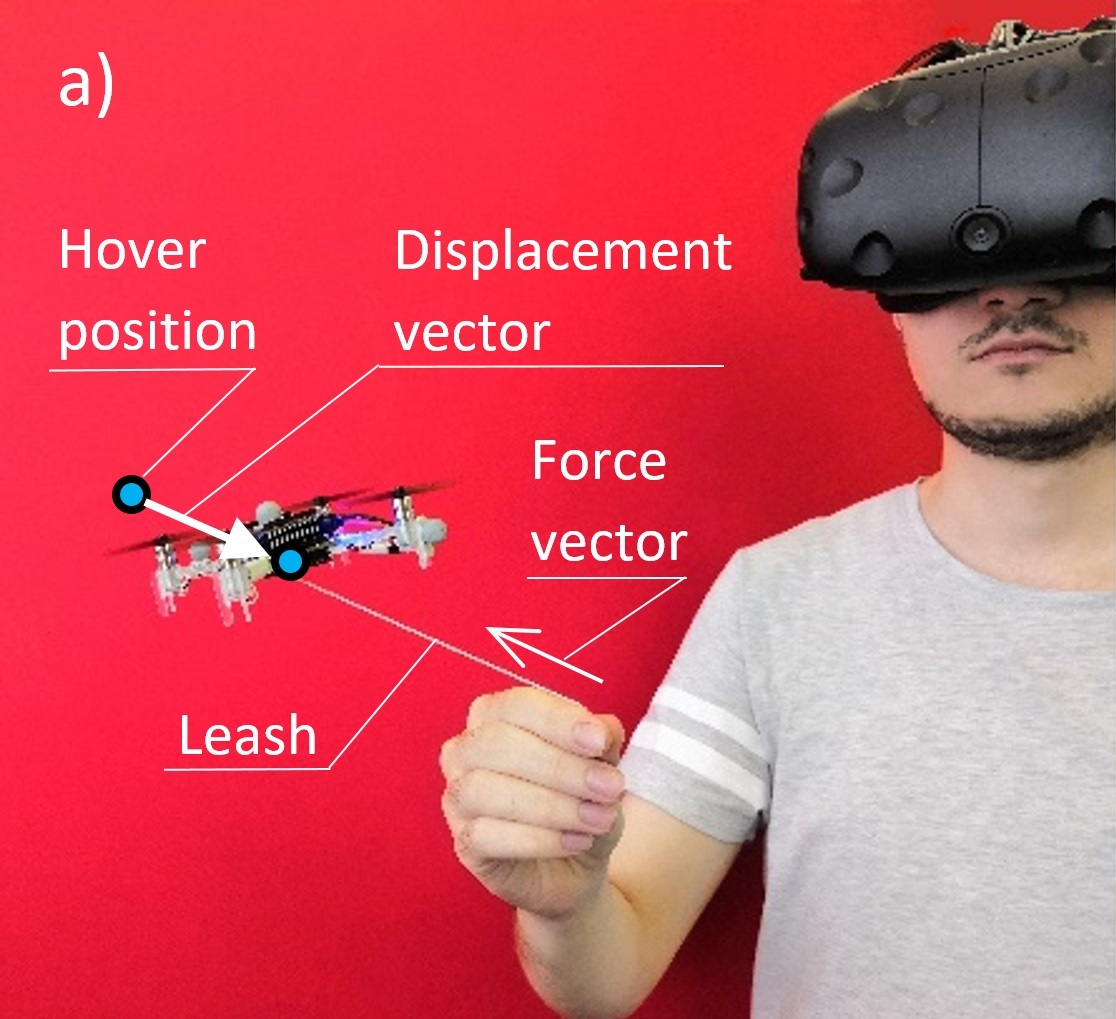

We propose SlingDrone, a novel Mixed Reality interaction paradigm that uses a micro-quadrotor as both a pointing controller and an interactive robot with a slingshot motion type. The drone attempts to hover at a given position while the human pulls it in the desired direction using a hand grip and a leash. Based on the displacement, a virtual trajectory is defined. To allow for intuitive and simple control, we use virtual reality (VR) technology to trace the path of the drone based on the displacement input. The user receives force feedback propagated through the leash. Force feedback from SlingDrone, coupled with the visualized trajectory in VR, creates an intuitive and user-friendly pointing device. When the drone is released, it follows the trajectory that was shown in VR. An onboard payload (e.g., a magnetic gripper) enables various scenarios of real interaction with the surroundings, such as manipulation or sensing. Unlike the HTC Vive controller, SlingDrone does not require handheld devices, so it can be used as a standalone pointing technology in VR.

arXiv | Video

Abstract

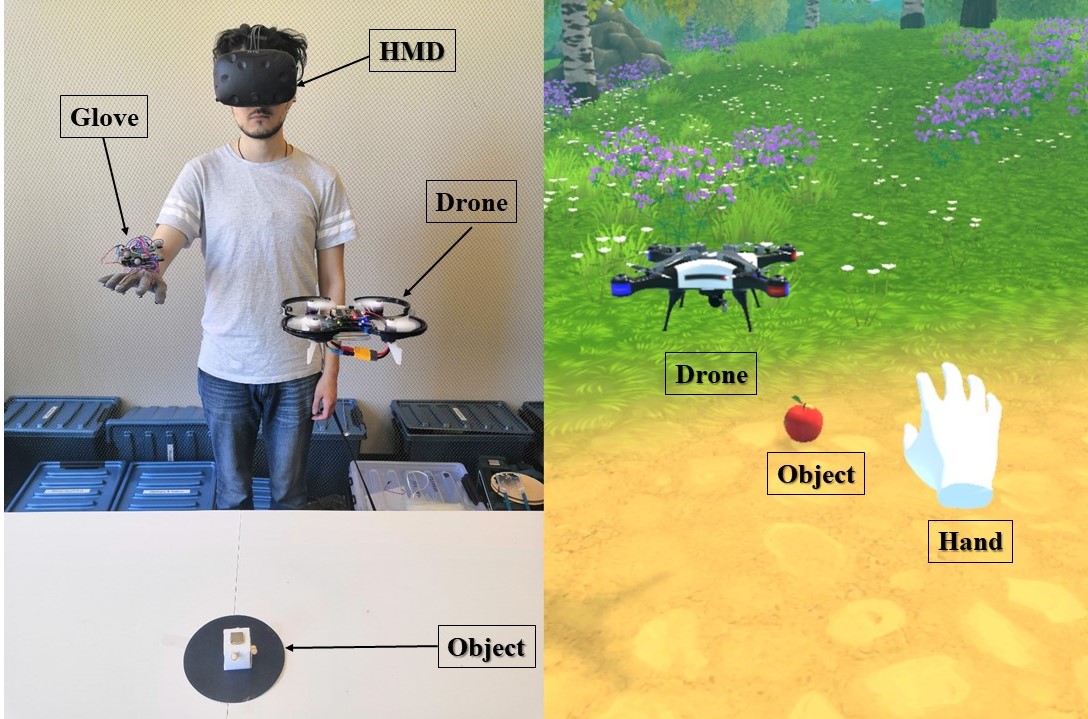

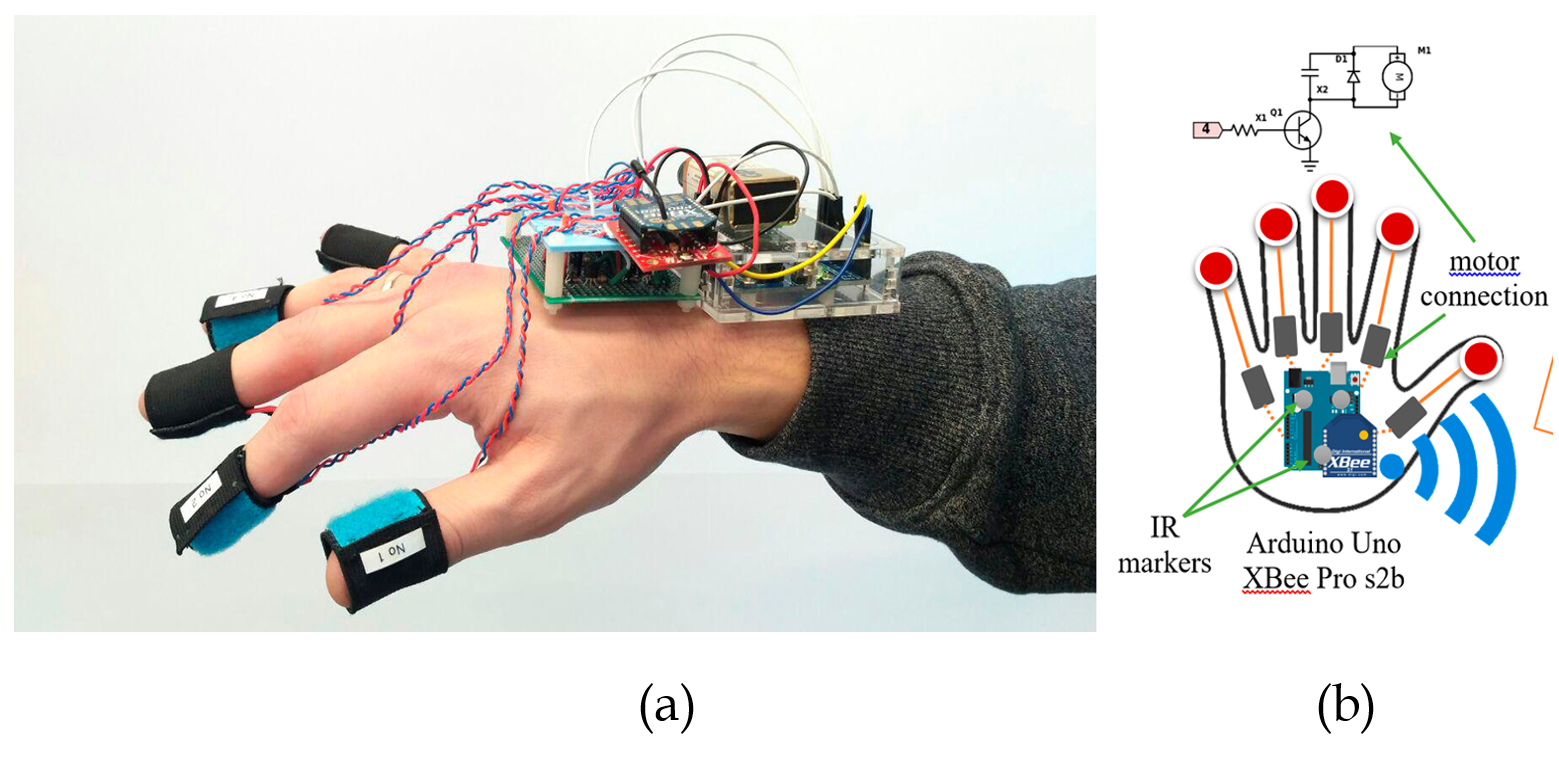

We report on DronePick, a teleoperation system that provides remote object picking and delivery by a human-controlled quadcopter. The main novelty of the proposed system is that the human user continuously receives visual and haptic feedback for accurate teleoperation. DronePick consists of a quadcopter equipped with a magnetic grabber, a tactile glove with a finger motion tracking sensor, a hand tracking system, and a Virtual Reality (VR) application. The human operator teleoperates the quadcopter by changing the position of the hand. Vibrotactile patterns representing the location of the remote object relative to the quadcopter are delivered to the glove, helping the operator determine when the quadcopter is directly above the object. When the "pick" command is sent by clenching the gloved hand, the quadcopter decreases its altitude and the magnetic grabber attaches to the target object. The whole scenario is simulated in parallel in VR. The airflow from the quadcopter and the relative positions of the VR objects help the operator determine the exact position of the delivered object to be picked. The experiments showed that users recognized the vibrotactile patterns with high accuracy: an average recognition rate of 99% and an average recognition time of 2.36 s. A real-life implementation of DronePick, featuring object picking and delivery to the human, was developed and tested.

Publisher | Video

Abstract

To achieve smooth and safe guidance of a drone formation by a human operator, we propose a novel interaction strategy for human–swarm communication that combines impedance control and vibrotactile feedback. The presented approach takes into account the velocity of the human hand and changes the formation shape and dynamics accordingly, using impedance interlinks simulated between quadrotors, which helps to achieve natural swarm behavior. Several tactile patterns representing static and dynamic parameters of the swarm are proposed. The user feels the state of the swarm at the fingertips and receives valuable information to improve the controllability of the complex formation. A user study showed high recognition rates for the patterns. A flight experiment demonstrated that the formation can be navigated accurately through a cluttered environment using only tactile feedback. Subjects stated that the tactile sensation allows them to guide the drone formation through obstacles and makes the human–swarm communication more interactive. The proposed technology could have a strong impact on human–swarm interaction, providing a higher level of awareness during swarm navigation.
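The impedance interlinks can be pictured as virtual spring-damper links between neighboring drones. The sketch below is a simplified 1-D illustration under stated assumptions: a leader that tracks the hand position directly, a one-directional chain of followers, and hypothetical mass `m`, stiffness `k`, damping `d`, and link rest length `rest`. It does not model the velocity-dependent shape changes or the tactile feedback described above.

```python
import numpy as np


def simulate_impedance_chain(hand_path, n_followers=2, rest=0.5, m=1.0, k=20.0, d=8.0, dt=0.01):
    """Simulate followers linked to a hand-driven leader by virtual spring-damper interlinks.

    hand_path: leader (hand) positions sampled every dt seconds, 1-D motion for simplicity.
    All physical parameters are illustrative assumptions. Returns final follower positions.
    """
    # Followers start at rest behind the leader, spaced by the link rest length.
    pos = hand_path[0] - rest * np.arange(1, n_followers + 1, dtype=float)
    vel = np.zeros(n_followers)
    leader_prev = hand_path[0]
    for leader in hand_path:
        leader_vel = (leader - leader_prev) / dt  # finite-difference hand velocity
        leader_prev = leader
        # Each follower is coupled to the agent directly ahead of it.
        ahead_pos = np.concatenate(([leader], pos[:-1]))
        ahead_vel = np.concatenate(([leader_vel], vel[:-1]))
        # Impedance law: F = k * (link stretch) + d * (relative velocity)
        force = k * ((ahead_pos - pos) - rest) + d * (ahead_vel - vel)
        # Semi-implicit Euler integration of m * a = F
        vel = vel + (force / m) * dt
        pos = pos + vel * dt
    return pos


# The hand moves 1 m over about one second, then holds still.
hand = np.concatenate([np.linspace(0.0, 1.0, 100), np.full(1000, 1.0)])
print(simulate_impedance_chain(hand))
```

With these values each link is a damped second-order system (damping ratio about 0.89), so the followers trail the hand smoothly and settle one rest length apart, near 0.5 m and 0.0 m. Lower damping would make the formation oscillate after the hand stops, which is the kind of dynamic behavior the tactile patterns are meant to convey.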

arXiv | Video

Abstract

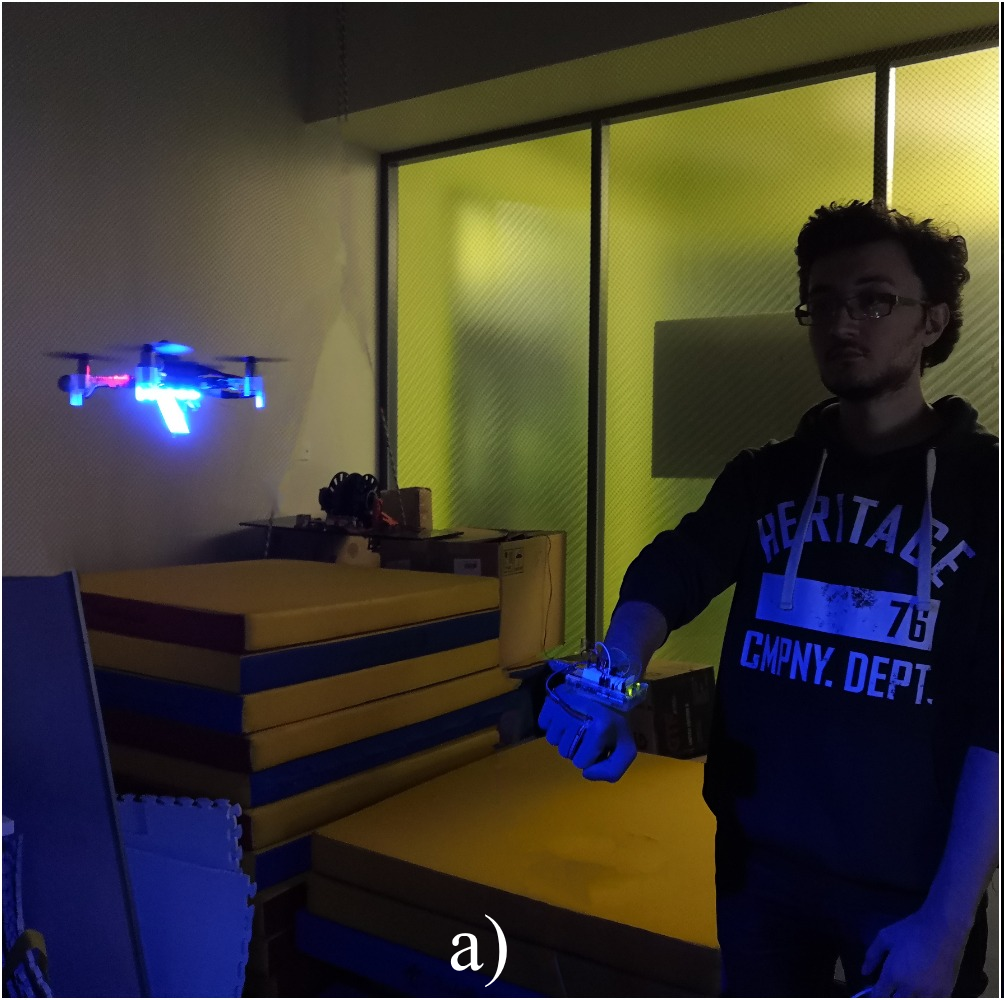

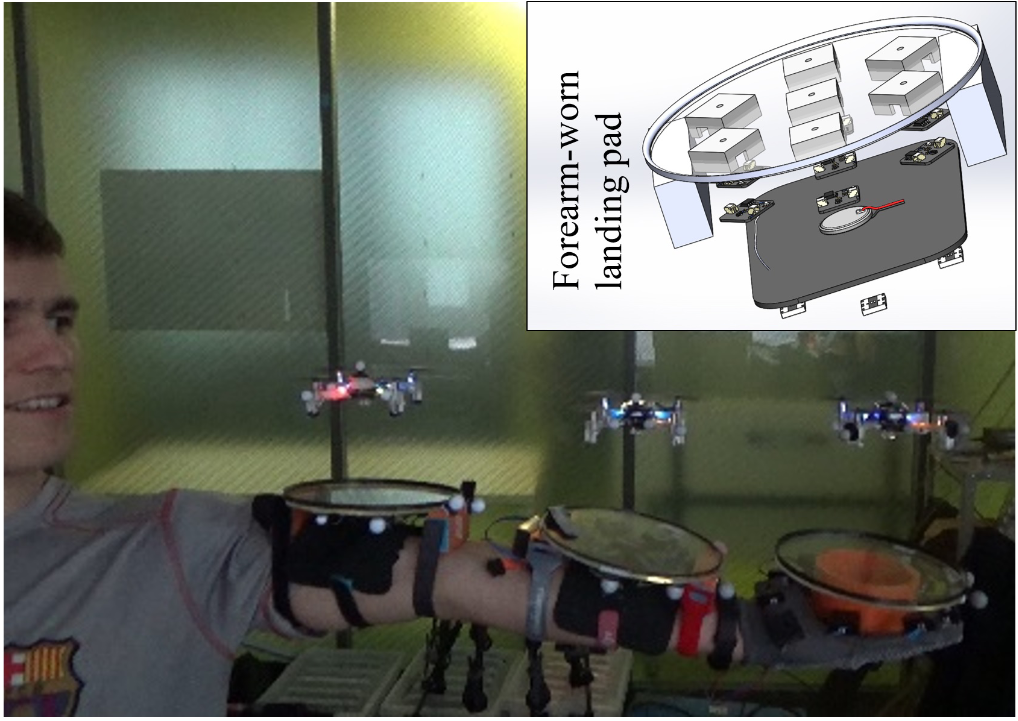

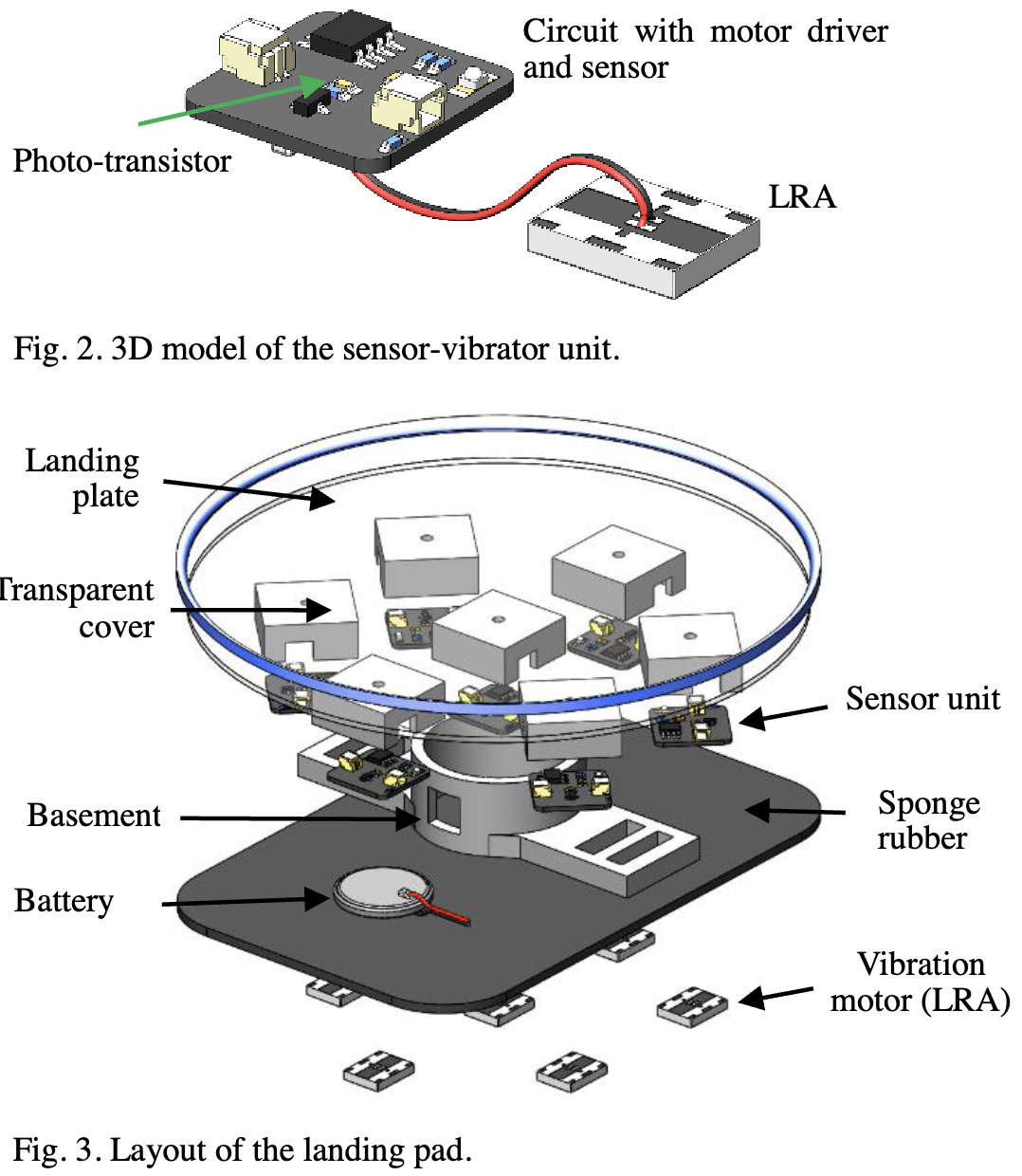

We propose SwarmCloak, a novel system for landing a fleet of four flying robots on a human's arms using light-sensitive landing pads with vibrotactile feedback. We developed two types of wearable tactile displays with vibromotors that are activated by the light emitted from the LED array at the bottom of the quadcopters. In a user study, participants were asked to adjust the position of their arms to land up to two drones with only visual feedback, only tactile feedback, or visual-tactile feedback. The experiment revealed that as the number of drones increases, tactile feedback plays a more important role in accurate landing and operator convenience. An important finding is that the best landing performance is achieved with the combination of tactile and visual feedback. The proposed technology could have a strong impact on human-swarm interaction, providing a new level of intuitiveness and engagement in swarm deployment directly from the skin surface.

Publisher

Abstract

The dynamics of systems of systems often involve complex interactions among the individual systems, making the implications of design choices challenging to predict. Design features in such systems may trigger unexpected behaviors or result in large variations in safety, performance, or resilience. To provide a means of simulating such systems for aiding in these decisions, we have developed a prototype tool, the control-oriented dynamic computational modeling tool (CDCM). The CDCM provides rapid simulation capabilities to perform trade studies in systems of systems. The general class of systems of systems that we aim to examine involves multiple hazards, damage, cascading consequences, repair, and recovery. We especially focus on systems of systems that incorporate a health management system (HMS) that can monitor the state of the habitat and make decisions about actions to take. In this paper, we describe the features of the CDCM, the architecture we devised for simulation of systems of systems, and the unique functionalities of this tool, and we demonstrate its capabilities through two illustrative examples. We articulate the use of this tool for making early design decisions and demonstrate its use for trade studies that consider a model of a deep space habitat. We also share some experiences and lessons that may be useful for others seeking to address similar problems.

arXiv

Abstract

For the human operator, it is often easier and faster to catch a small-size quadrotor right in midair instead of landing it on a surface. However, interaction strategies for such cases have not yet been considered properly, especially when more than one drone has to be landed at the same time. In this paper, we propose a novel interaction strategy to land multiple robots on a human's hands using vibrotactile feedback. We developed a wearable tactile display that is activated by the intensity of light emitted from an LED ring on the bottom of the quadcopter. We conducted experiments in which participants were asked to adjust the position of the palm to land one or two vertically descending drones with different landing speeds, having only visual feedback, only tactile feedback, or visual-tactile feedback. We performed a statistical analysis of the drone landing positions and of the landing pad and human head trajectories. Two-way ANOVA showed a statistically significant difference between the feedback conditions. Experimental analysis showed that with an increasing number of drones, tactile feedback plays a more important role in accurate hand positioning and operator convenience. The most precise landing of one and two drones was achieved with the combination of tactile and visual feedback.
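For readers unfamiliar with the two-way ANOVA mentioned above, the following minimal NumPy sketch computes the F statistics for a balanced design with interaction. The factor layout (for example, feedback condition as factor A and number of drones as factor B) and the synthetic data in the usage example are illustrative assumptions, not the study's data or analysis code. A p-value can then be read from the F distribution, for instance with `scipy.stats.f.sf(F, df1, df_e)`.

```python
import numpy as np


def two_way_anova(data):
    """Balanced two-way ANOVA with interaction.

    data has shape (a, b, n): a levels of factor A, b levels of factor B,
    and n replicates per cell. Returns F statistics and degrees of freedom.
    """
    data = np.asarray(data, dtype=float)
    a, b, n = data.shape
    grand = data.mean()
    cell = data.mean(axis=2)    # (a, b) cell means
    mean_a = cell.mean(axis=1)  # (a,) factor-A level means (valid for balanced designs)
    mean_b = cell.mean(axis=0)  # (b,) factor-B level means

    # Sums of squares for main effects, interaction, and within-cell error.
    ss_a = b * n * np.sum((mean_a - grand) ** 2)
    ss_b = a * n * np.sum((mean_b - grand) ** 2)
    ss_ab = n * np.sum((cell - mean_a[:, None] - mean_b[None, :] + grand) ** 2)
    ss_e = np.sum((data - cell[:, :, None]) ** 2)

    df_a, df_b = a - 1, b - 1
    df_ab, df_e = df_a * df_b, a * b * (n - 1)
    ms_e = ss_e / df_e
    return {
        "F_A": (ss_a / df_a) / ms_e,
        "F_B": (ss_b / df_b) / ms_e,
        "F_AB": (ss_ab / df_ab) / ms_e,
        "df": (df_a, df_b, df_ab, df_e),
    }


# Synthetic example: 3 feedback conditions x 2 drone counts x 2 replicates.
# Only factor A shifts the mean, so F_B and F_AB are zero here.
base = np.array([0.0, 1.0, 2.0])
data = base[:, None, None] + np.tile([-1.0, 1.0], (3, 2, 1))
print(two_way_anova(data))
```

The interaction term matters for this kind of study because the claimed effect (tactile feedback helping more as the number of drones grows) is itself an interaction between the two factors.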

arXiv | Video

Abstract

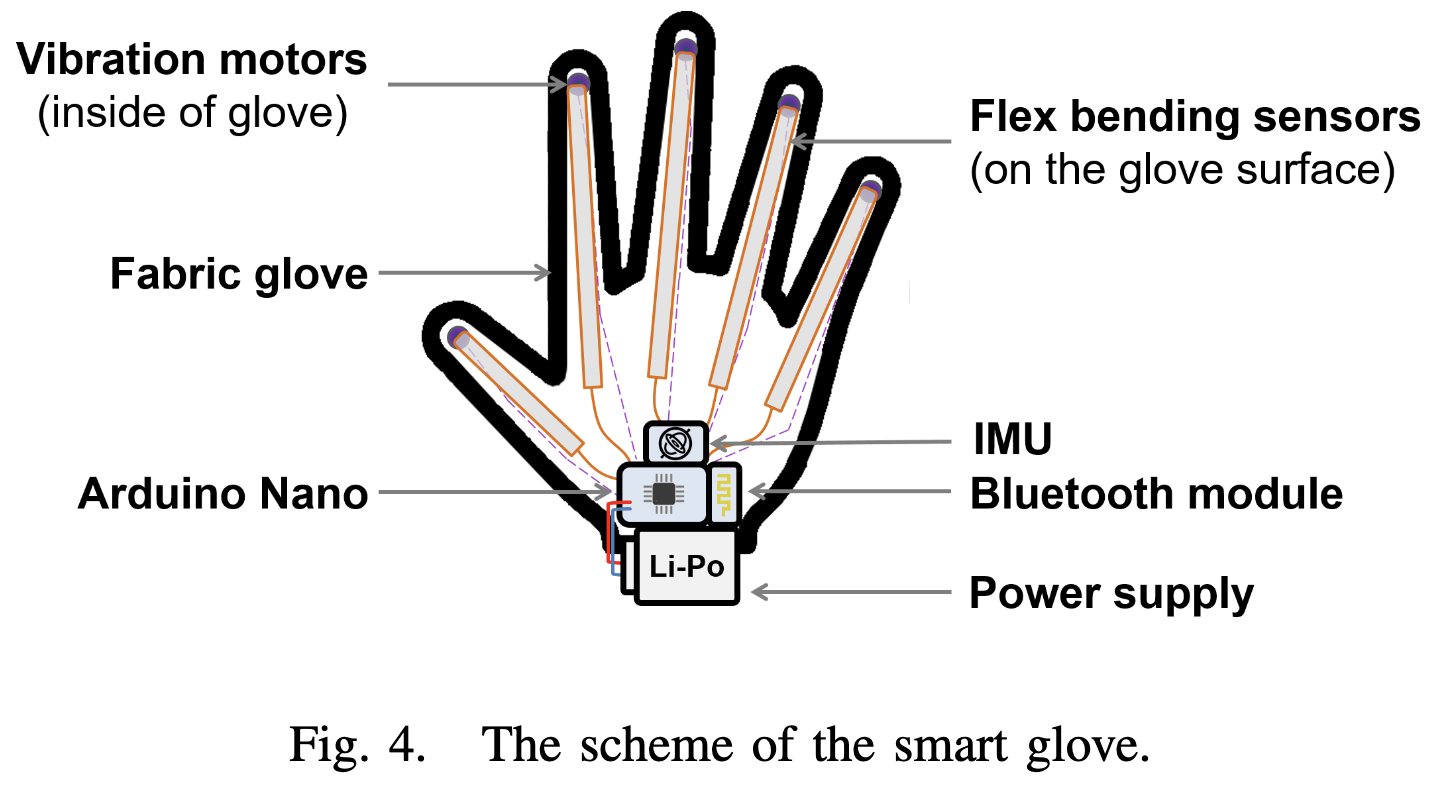

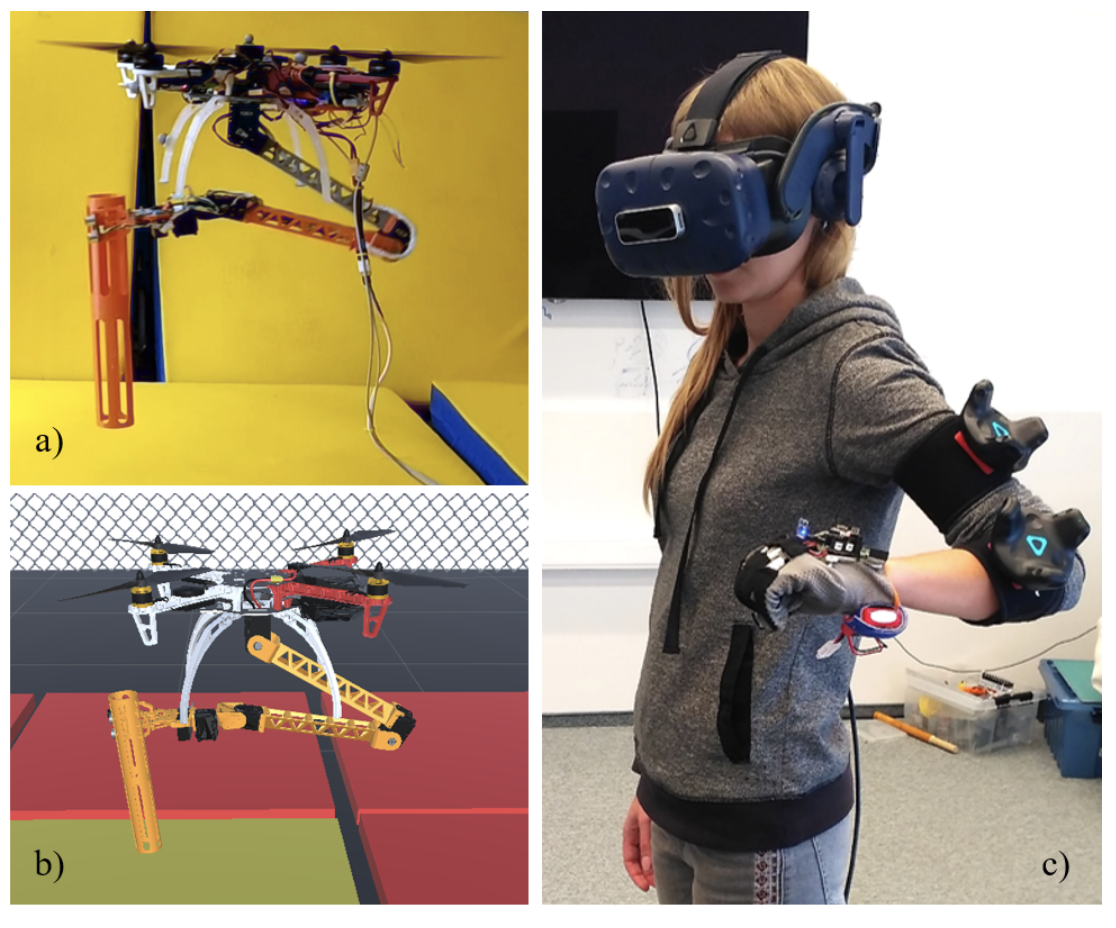

Drones for aerial manipulation are being tested in areas such as industrial maintenance, support for rescuers in emergencies, and e-commerce. Most such applications require teleoperation, in which the operator receives visual feedback from a camera installed on the robot arm or drone. Because aerial manipulation requires delicate and precise motion of the robot arm, camera data delay, a narrow field of view, and image blur caused by drone dynamics can lead the UAV to crash. This paper focuses on the development of a novel teleoperation system for aerial manipulation using Virtual Reality (VR). The controlled system consists of a UAV with a 4-DoF robotic arm and embedded sensors. The VR application presents a digital twin of the drone and the remote environment to the user through a head-mounted display (HMD). The operator controls the position of the robotic arm and gripper with VR trackers worn on the arm and a tracking glove with vibrotactile feedback. Control data is translated directly from VR to the real robot in real time. The experimental results showed stable and robust teleoperation mediated by the VR scene. The proposed system can considerably improve the quality of aerial manipulation.

Teaching & Services

Teaching

Mentoring

Academic Service
